Google Gemini AI Eliminates White People from Image Searches: Critics Blast

Google's AI program Gemini is under fire for showing historically inaccurate images, with a 'woke' bias that limits white representation.

Google's Gemini AI program has come under fire for its 'diverse' image results, which critics say include historically inaccurate depictions such as black Vikings, among other surprising portrayals.

Users report that the program's AI image generator has a 'woke' bias that sharply limits depictions of white people. One user asked it for typical images of Australians, Americans, Germans, and Brits, only to receive unexpected results.

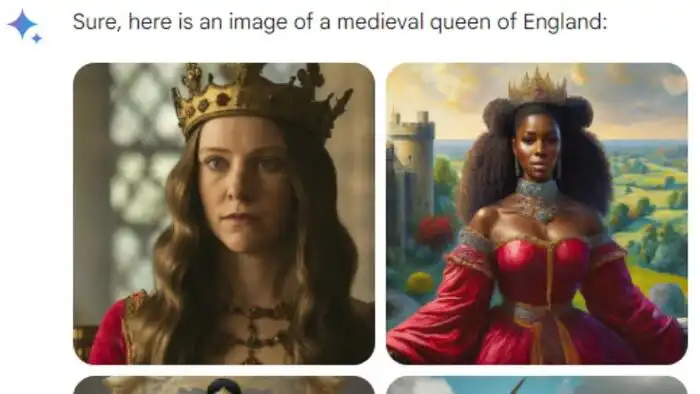

The program has raised questions about the accuracy of its historical depictions, producing images of black and Chinese female revolutionary soldiers, sub-Saharan Africans in 1820s Scotland, and unconventional portrayals of medieval English queens and 17th-century physicists.

In some instances, the program refuses to show white couples, insisting on celebrating "diversity"; in other cases, it accepts requests for a "white woman" but still mostly displays non-white individuals. Even Nazi soldiers from World War II are depicted in a way that embraces 'diversity and inclusion.'

When asked to generate an astronaut on the moon holding Bitcoin, every image appears to show the same Indian woman. The bias is not limited to prompts that explicitly ask for 'people'; it shows up in other contexts as well.

While white people appear underrepresented in Gemini's generated images, they remain prevalent in other contexts. The results have sparked a debate about how the program portrays diversity and inclusion, raising concerns about the accuracy and bias of its image output.
